The following story diagram—or Storygram—annotates an award-winning story to shed light on what makes some of the best science writing so outstanding. The Storygram series is a joint project of The Open Notebook and the Council for the Advancement of Science Writing. It is supported in part by a grant from the Gordon and Betty Moore Foundation. This Storygram is co-published at the CASW Showcase.

The paradox of Charles Piller’s remarkable STAT investigation “Law Ignored, Patients at Risk: Failure to Report” is that in order to reveal large swathes of missing data, he had to spend several months crunching enormous datasets to understand what was absent. Before Piller could even sit down to write the sentences of his article, his STAT colleague Natalia Bronshtein had to first write the code to download and analyze the raw information, which she did using the computer programming language Python. The process yielded 200,000 records of clinical trials to analyze, producing a grand total of 18 million data points. There also were the hours Piller spent combing through the spreadsheets to catch any spelling errors that would trip up the analysis—an arduous process that caused him to suffer a repetitive strain injury.

Ultimately, though, the payoff of this work was huge: People had known for years that many researchers and their institutions had neglected to deposit study results into the public database ClinicalTrials.gov as required by law, but this investigation finally named the worst offenders and offered shocking details about the true scope of the problem. Throughout the story, Piller offers striking examples of how poor compliance with the law, and lax enforcement of it, have put human lives at risk. It’s the kind of story that piques your curiosity, and luckily Bronshtein’s accompanying interactive graphic allows you to learn more. The graphic first gives readers a brief explanation of how it works. Then it invites them to interrogate the data themselves by scrolling over specific slices of the sophisticated interactive pie chart. For those who want a direct route to finding out how well a particular institution performed, a drop-down menu on the side provides a fast way to zero in on a specific name. The graphic illustrates how it’s not just a few bad apples; it’s a whole bushel.

Piller and Bronshtein’s work, and the follow-up pieces that came out of this investigation, applied real pressure on the government to fortify its efforts to improve clinical trial data reporting. The government already had plans to begin refining its rules when Piller began his reporting on this subject, but his piece turned up the heat on it to do far better than before. None other than Vice President Joe Biden and Senator Charles Grassley cited the STAT investigation as a reason to improve enforcement of the rules. The influential piece earned Piller and Bronshtein a Gold Award in the online category of the 2016 AAAS Kavli Science Journalism Awards.

I read this piece when it first came out, and it has stuck with me because I was so surprised to find prestigious academic institutions fall so short relative to other institutions. Looking closely at Piller’s article, one sees the careful way he unpacks the unexpected revelations of the investigation for the reader. In the Q&A that follows, Piller tells me about how he methodically structured the piece. He also reflects on what it takes to dive headfirst into this sort of data-driven investigation, and on his love of a good mystery.

Story Annotation

“Law Ignored, Patients at Risk: Failure to Report—a STAT Investigation”

By Charles Piller

Data visualization by Natalia Bronshtein

December 13, 2015

(Reprinted with permission)

Stanford University, Memorial Sloan Kettering Cancer Center, and other prestigious medical research institutions have flagrantly violated a federal law requiring public reporting of study results, depriving patients and doctors of complete data to gauge the safety and benefits of treatments, a STAT investigation has found. [Annotation: Piller’s piece starts off with a bang, and rightfully so. This finding—that some of the biggest offenders are our nation’s most esteemed institutions—grabs the reader by the collar because it is so unexpected. The lede sentence also nods to the impact that the violation has on patients, underscoring the human impact of this finding, which is important in a data-driven piece such as this. Lastly, it makes clear that the forthcoming information in the article is original and exclusive; it signals to the reader that the story will contain new revelations.]

The violations have left gaping holes in a federal database used by millions of patients, their relatives, and medical professionals, often to compare the effectiveness and side effects of treatments for deadly diseases such as advanced breast cancer.

The worst offenders included four of the top 10 recipients of federal medical research funding from the National Institutes of Health: Stanford, the University of Pennsylvania, the University of Pittsburgh, and the University of California, San Diego. All disclosed research results late or not at all at least 95 percent of the time since reporting became mandatory in 2008. [Annotation: Numbers can help the reader grasp the enormity of the problem. Rather than just vaguely saying that violation of the law is widespread, this 95 percent figure gives the reader something firm to grip. The sentence also signals how long the regulations have been in place.]

Failure to Report: A STAT investigation

What we found: Most research institutions — including leading universities and hospitals in addition to drug companies — routinely break a law that requires them to report the results of human studies of new treatments to the federal government’s ClinicalTrials.gov database.


Drug companies have long been castigated by lawmakers and advocacy groups for a lack of openness on research, and the investigation shows just how far individual firms have gone to skirt the disclosure law. [Annotation: This sentence telegraphs that the piece will explain how institutions have not just ignored the law but done something much more malicious by actively not complying with it. It makes us wonder just how far they went and thus motivates us to keep reading.] But while the industry generally performed poorly, major medical schools, teaching hospitals, and nonprofit groups did worse overall — many of them far worse.

The federal government has the power to impose fines on institutions that fail to disclose trial results, or suspend their research funding. It could have collected a whopping $25 billion from drug companies alone in the past seven years. But it has not levied a single fine. [Annotation: This paragraph made my jaw drop. Twenty-five billion dollars is an enormous amount of money. What’s great is that the story provides this hypothetical number. Piller also really drives home the lack of enforcement with a succinct sentence about how no money has been collected to date.]

The STAT investigation is the first detailed review of how well individual research institutions have complied with the law. [Annotation: This is important—readers need to know what’s new. This clearly lays that out: The piece will name names (of individual institutions) for the first time.]

The legislation was intended to ensure that findings from human testing of drugs and medical devices were quickly made public through the NIH website ClinicalTrials.gov. Its passage was driven by concerns that the pharma industry was hiding negative results to make treatments look better — claims that had been dramatically raised in a major lawsuit alleging that the manufacturer of the antidepressant Paxil concealed data that the drug caused suicidal thoughts among teens. [Annotation: Context is so important, and this provides it brilliantly. Here we begin to understand why this law exists in the first place. Giving readers this origin story is important and helps them connect the dots.]

“GlaxoSmithKline was misstating the downside risks. We then asked the question, how can they get away with this?” Eliot Spitzer, who filed the 2004 suit when he was New York attorney general, said in an interview. [Annotation: Spitzer’s voice here helps flesh out the background to this story and explain why lawmakers instituted the regulations, and what they hoped to achieve. He’s also a well-known politician who has endured scandal himself, so people will be intrigued to know he was involved in this.]

The STAT analysis shows that “someone at NIH is not picking up the phone to enforce the obligation to report,” Spitzer said. “Where NIH has the leverage through its funding to require disclosure, it’s a pretty easy pressure point.”

NIH Director Dr. Francis Collins acknowledged in a statement that the findings are “very troubling.” [Annotation: It’s important to include what the institutions in question have to say about these revelations. And there’s no better person to speak for NIH than Collins himself.]

Clinical trial reporting lapses visualized

STAT examined data reporting for all institutions – federal agencies, universities, hospitals, nonprofits, and corporations – required to report results to ClinicalTrials.gov for at least 20 human experiments since 2008. [Annotation: OK, now we’re in the meat of the story. This is a good place to tell the reader the nuts and bolts of how the investigation was done. It’s important to include this methodology in the story. This explanation is direct and doesn’t get too technical. It provides just the right amount of detail.] This visualization shows the first comparative analysis of their performance. Among the groups, just two companies provided trial results within the legal deadline more than half the time. Most – including the National Institutes of Health, which oversees the reporting system – violated the law the vast majority of the time.

Doctors rely on robust reporting of data to see how effectively a drug or device performs and to understand the frequency of side effects such as suicidal thinking — crucial information for making treatment recommendations. [Annotation: Here the story expands on an earlier point and gives more detail about why compliance with the law matters to the general public. In a data-driven story like this, it’s important to remind the reader at various junctures in the piece that this violation of the law has a real effect on medical care.] Researchers depend on prior results to plan future studies and to try to ensure the safety of volunteers who participate in trials.

Yet academic scientists have failed to disclose most results on time — within a year of a study being completed or terminated.

In interviews, researchers, university administrators, and hospital executives said they are not intentionally undermining the law. They said that they are simply too busy and lack administrative funding, and that they disclose some clinical trial results in medical journals and at conferences. Some experts voiced concerns that the researchers might also face pressure from corporate sponsors to delay reports — whether negative or positive — for commercial reasons. [Annotation: We know by now in the story that institutions are performing poorly in terms of compliance. The next logical question is why. This bit helps satisfy that curiosity. In doing so, it doesn’t leave the reader hanging. The reader is getting a payoff here—greater understanding of the issue and the forces at play.]

Not much to boast about

A number of trials languishing on researchers’ “to do” piles involve life-and-death problems. [Annotation: I love the stark contrast in this sentence between the banal “‘to do’ piles” and striking “life-and-death problems.” The section opener ups the ante and gets the reader wondering again how this situation ever came to be. Piller is continuously piquing the reader’s curiosity.]

Memorial Sloan Kettering failed to report data for two trials of the experimental anticancer drug ganetespib, made by Synta Pharmaceuticals. The results of tests involving breast and colorectal cancer patients showed serious adverse effects in 13 of 37 volunteers. They included heart, liver, and blood disorders, bowel and colon obstructions, and one death. [Annotation: In a data-driven story involving a lot of numbers, examples mean everything. This example is very powerful in that it involves not one but two trials of a drug that a prestigious institution failed to report in a timely way into the database and one in which the study included a death.]

The New York City hospital said it delayed its reporting so that it could complete related medical journal articles. It rushed to submit the results to ClinicalTrials.gov only after being contacted by STAT — more than two years late for one of the studies.

Collette M. Houston, who directs clinical research operations for Memorial Sloan Kettering, said she believes the researchers did immediately report adverse events to Synta and the Food and Drug Administration. But a hospital spokesperson said records needed to verify if that happened were no longer accessible.

Houston said that Synta, which paid for the research, normally would alert investigators on other ongoing trials of the same drug about adverse events, so they would learn of the possible added risks to patients despite Memorial Sloan Kettering’s failure to report to ClinicalTrials.gov.

In another case, delayed reporting denied doctors timely information on treating breast cancer. [Annotation: Piller gives the reader another example. This drives home the important point that it’s not just a single incident, it’s a widespread issue.] In 2009, the nonprofit Hoosier Cancer Research Network terminated a study of Avastin in 18 patients with metastatic breast cancer. The drug didn’t help and caused trial volunteers serious harm — including hypertension, gastrointestinal toxicity, sensory problems, and pain. But the Indianapolis-based network, which runs trials under contract for drug companies, did not report the results as required the following year — though doctors nationwide were debating whether it was advisable to treat breast cancer patients with Avastin.

In 2011, the FDA revoked its approval of Avastin for breast cancer after determining that it was ineffective for that use and posed life-threatening risks. The Hoosier Network researchers finally published the data in a medical journal in 2013. They have yet to post results on ClinicalTrials.gov — nearly six years after the legal deadline.

The lead investigator, Dr. Kathy Miller of Indiana University School of Medicine, blamed “short staffing” for the reporting delay. “Getting that uploaded was not a big priority,” she said, adding that she had never heard of anyone using ClinicalTrials.gov to examine trial results. She insisted that her study “didn’t have anything to add to that national debate” about Avastin’s safety and efficacy.

STAT identified about 9,000 trials in ClinicalTrials.gov subject to the law’s disclosure requirements. The analysis focused on the 98 universities, nonprofits, and corporations that served as sponsors or collaborators on at least 20 such trials between 2008 and Sept. 10 of this year. [Annotation: Earlier in the piece, Piller described the methodology. This part here gives some payoff and tells the reader that 9,000 trials—a huge number—were identified by STAT’s investigation as subject to the law’s disclosure requirements. This gives some additional vantage on the scale of the issue.]

None of the organizations has much to boast about. [Annotation: The use of short, declarative topic sentences such as this helps frame the information in a way that’s easy to understand. Piller uses approachable language throughout the piece, which could otherwise have slipped into a difficult-to-digest legalistic tone.] Only two entities — drug companies Eli Lilly and AbbVie — complied with reporting requirements more than half the time, a sign of how uniformly the research community flouts the law.

Results from academic institutions arrived late or not at all 90 percent of the time, compared with 74 percent for industry. More than one-fourth of companies did better than all the universities and nonprofits.
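To make the selection and scoring described above concrete, here is a minimal Python sketch. It is not the code Bronshtein wrote for STAT. The record layout, the function name `late_or_missing_rate`, the sample data, and the 365-day approximation of the one-year deadline are all assumptions made for illustration.

```python
from collections import defaultdict
from datetime import date, timedelta

# Assumption: the one-year legal deadline is approximated as 365 days.
DEADLINE = timedelta(days=365)
MIN_TRIALS = 20  # the story's threshold for including an organization


def late_or_missing_rate(records, min_trials=MIN_TRIALS):
    """Return {sponsor: share of trials reported late or not at all}.

    Each record is a hypothetical (sponsor, completed, posted) tuple, where
    `posted` is None when no results were ever submitted.
    """
    on_time_flags = defaultdict(list)
    for sponsor, completed, posted in records:
        on_time = posted is not None and posted - completed <= DEADLINE
        on_time_flags[sponsor].append(on_time)

    rates = {}
    for sponsor, flags in on_time_flags.items():
        if len(flags) < min_trials:
            continue  # too few covered trials to be included
        late_or_missing = len(flags) - sum(flags)
        rates[sponsor] = late_or_missing / len(flags)
    return rates


# Made-up example data, not drawn from ClinicalTrials.gov.
done = date(2012, 1, 1)
records = (
    [("Example University", done, done + timedelta(days=100))] * 2    # on time
    + [("Example University", done, done + timedelta(days=800))] * 8  # late
    + [("Example University", done, None)] * 10                       # never posted
    + [("Small Lab", done, None)] * 3                                 # below threshold
)
print(late_or_missing_rate(records))
```

In this toy data set, only the organization with at least 20 covered trials is scored, mirroring the cutoff the story describes.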

The FDA, which regulates prescription drugs, is empowered to levy fines of up to $10,000 a day per trial for late reporting to ClinicalTrials.gov. In theory, it could have collected $25 billion from drug companies since 2008 — enough to underwrite the agency’s annual budget five times over. [Annotation: This $25 billion was alluded to earlier in the piece, but here it is given additional weight by being compared to the FDA’s budget, which it dwarfs. That gives some additional context. Because Piller evolves this information by adding something new, it doesn’t come across as a mere repetition of the number. Instead, it’s a further revelation.] But neither FDA nor NIH, the biggest single source of medical research funds in the United States, has ever penalized an institution or researcher for failing to post data.
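The scale of the fine figure is easier to feel with some arithmetic. The Python sketch below uses only the $10,000-a-day maximum and the $25 billion total quoted in the story. The function name `max_fine_usd` and the sample delay are hypothetical, and this is not a reconstruction of how STAT arrived at its estimate.

```python
DAILY_FINE_USD = 10_000           # maximum fine per trial per day, per the story
STORY_TOTAL_USD = 25_000_000_000  # theoretical total cited for drug companies


def max_fine_usd(days_late_per_trial):
    """Upper bound on fines for a list of per-trial delays, in days."""
    return sum(DAILY_FINE_USD * max(days, 0) for days in days_late_per_trial)


# A single hypothetical trial reported two years (730 days) late.
print(max_fine_usd([730]))

# Number of late trial-days that $25 billion corresponds to at the maximum rate.
print(STORY_TOTAL_USD // DAILY_FINE_USD)
```

At the maximum rate, one trial two years overdue would accrue $7.3 million, and $25 billion corresponds to 2.5 million late trial-days.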

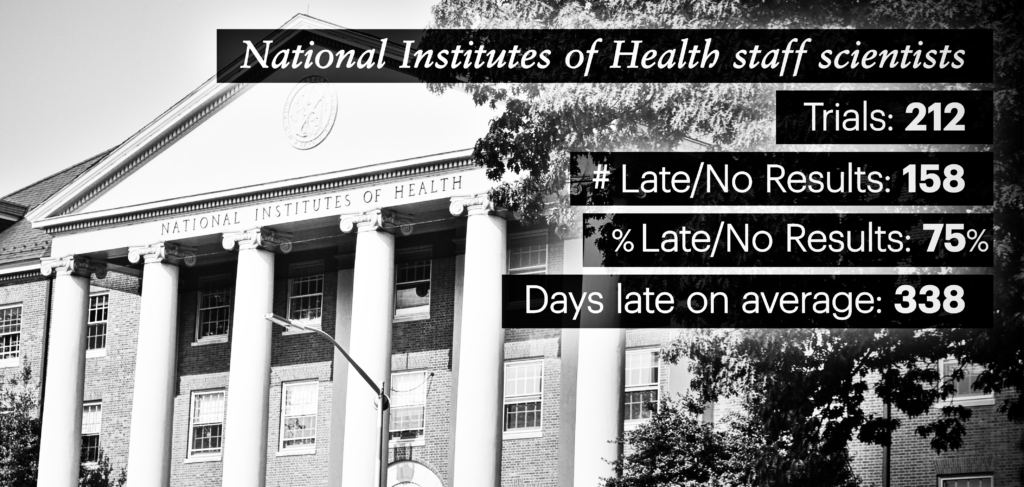

Even the agency’s own staff scientists have violated the reporting law three-quarters of the time. [Annotation: This line stands alone for a reason: It’s a dramatic twist, and counterintuitive.]

Collins said NIH research shows reporting rates by both industry and academia have improved over the past several years due to greater awareness, and NIH’s own scientists are now reporting their results about 90 percent of the time. “Of course I would like to see all of these rates reach 100 percent,” Collins said.

Data from ClinicalTrials.gov reviewed by STAT show NIH scientists’ reporting of results within the legal deadline peaked at 38 percent in 2013. Counting results reported late, NIH staff performance reached 90 percent for studies due in 2012 but has dropped since then.

Beginning next spring, after further refinement of ClinicalTrials.gov rules, NIH and FDA will have “a firmer basis for taking enforcement actions,” Collins said. If an NIH grantee fails to meet reporting deadlines, their funding can be terminated, he said.

The agency’s staff scientists, he added, “will be required to follow the law and NIH policy, and there will be consequences … for failing to do so.”

‘Too busy to follow the law’

University researchers and administrators cited a lack of time as one of the reasons they miss reporting deadlines. Lisa Lapin, a top communications official at Stanford, said via email that many faculty members find the process for entering results “above and beyond” their capacity, “given multiple existing constraints on their time” and no funding for additional staff.

Stanford supplied data to ClinicalTrials.gov for 26 of 82 trials for which results were due — far below the performance by most other top universities or companies. Professors supplied data on time for only four studies.

Dr. Harry Greenberg, senior associate dean for research at Stanford, said his institution was determined to improve.

“We will solve it, and we’ll solve it the best way possible,” through steps that include providing support for faculty members to enter trial data, he said.

NIH estimates that it takes, on average, about 40 hours to submit trial results, and many drug and medical device makers have dedicated staff to post data to ClinicalTrials.gov. Eli Lilly has seven full-time employees for the task. [Annotation: One of the logical questions at this point in the piece is, how much effort would it take to comply with the law? Piller satisfies our curiosity by specifying that it takes 40 hours, and then provides another measure (staffing) to illustrate how reporting trial data is not a trivial task. Including this calculation of hours acknowledges the barriers to depositing the information into the database. But it doesn’t excuse the massive lapse. It gives more context and weight to the investigation.]

But Collins and advocates for transparency said time demands are no excuse for noncompliance. “There is no ethical justification for ignoring the law,” said Jennifer Miller, a New York University medical ethicist. “It’s not OK to say, ‘I’m too busy to follow the law.’” [Annotation: This quote is perfectly set up by the preceding text and previous paragraph.]

Filling information gaps

Six organizations — Memorial Sloan Kettering, the University of Kansas, JDRF (formerly the Juvenile Diabetes Research Foundation), the University of Pittsburgh, the University of Cincinnati, and New York University — broke the law on 100 percent of their studies — reporting results late or not at all.

Dr. Paul Sabbatini, a research executive at Memorial Sloan Kettering, said that as an efficiency measure, his organization previously tried to complete work on scholarly publications in tandem with reporting ClinicalTrials.gov results. “We thought and hoped that we could keep that process bundled,” he said, but preparing journal articles and getting them published often takes years. The hospital will now post to ClinicalTrials.gov independently. [Annotation: At this point, the story has delved deep into the issue of noncompliance. Piller gives the reader the sense here that the story is very much in motion and that his investigative reporting process has already triggered some rethinking among institutions—even before the publication of this piece. Again, this is payoff for the reader.]

Several organizations argued that publishing in peer-reviewed medical journals is the highest form of disclosure of study results and should be sufficient, or that there should be a way to simply copy journal data into ClinicalTrials.gov.

Critics say such views reflect the primacy of scholarly articles for academic career advancement more than any inherent superiority over ClinicalTrials.gov.

A 2013 analysis in the journal PLOS Medicine compared 202 published studies against results in ClinicalTrials.gov for the same research. The NIH registry proved far more complete, especially for reporting adverse events — signs that a therapy might be going badly wrong. [Annotation: Piller has noted earlier in the story how some institutions point to published scientific papers as a place they have reported findings. But here he uses a robust research study to show that published papers do not necessarily provide the same breadth of information as the ClinicalTrials.gov data.]

Journals often reject papers about small studies or trials stopped early for a range of reasons, such as a drug causing worrisome side effects. They publish relatively few negative results, although failed tests can be as important as positive findings for guiding treatment. ClinicalTrials.gov tries to fill these critical information gaps and serve as a timely and comprehensive registry.

The website is open to the public, unlike many journals that charge a substantial fee. [Annotation: It’s important to make comparisons direct and straightforward. This sentence achieves that.] And the site requires data to be entered in a consistent way that allows easier comparisons of benefits or side effects across many studies.

It also lets experts check whether researchers have cherry-picked results, which can mislead doctors and patients.

Preliminary work by The Compare Project, at the Centre for Evidence-Based Medicine at the University of Oxford, suggests that shifts away from outcome measures designated before the start of a clinical trial to results selected at the study’s conclusion are common. The group recently examined reports in leading journals on 44 trials and found that overall, 213 original measures went unreported, while 225 new measures were added.

Withheld data spurred action

Congress passed a law requiring registration of clinical trials in 1997, and the ClinicalTrials.gov database was created in 2000 for this purpose. At first it was little used. [Annotation: Here the story starts to come full circle, but it also delves deeper into the backstory of the law. The lens is widening out so we can get the big picture now that we’ve scrutinized the present.]

NYU’s Miller traces the origins of stronger reporting requirements to Spitzer’s Paxil suit.

GlaxoSmithKline disputed the charges but settled out of court. This past September, the medical journal BMJ reanalyzed clinical trial data gathered by the company in 2001 and recently shared with independent scientists. Its findings showed Paxil to be no more effective in teens than a placebo, and linked the drug to increased suicide attempts.

If you’re enjoying this Storygram, also check out two resources that partly inspired this project: the Nieman Storyboard’s Annotation Tuesday! series and Holly Stocking’s The New York Times Reader: Science & Technology.

Scenarios similar to the Paxil case have been repeated many times since with other drugs.

In the wake of the bird flu scare a decade ago, governments stockpiled billions of dollars worth of the antiviral drug Tamiflu, thought to be a lifesaver. The Lancet reported last year, however, that previously undisclosed trial data showed that Tamiflu “did not necessarily reduce hospital admissions and pulmonary complications in patients with pandemic influenza.” [Annotation: This example shows us that concerns about data reporting persist beyond Paxil, and that new examples have cropped up as recently as last year.]

Another notorious case involved an experimental arthritis drug, TeGenero’s TGN1412, tested on six men in London in 2006. All immediately fell gravely ill. One suffered heart failure, blood poisoning, and the loss of his fingers and toes in a reaction that resembled gangrene. A close variant of the drug had been found potentially dangerous years earlier, but the results had never been published. [Annotation: This is an incredibly sad example, but an important one because it was a headline story in 2006 and drives home in a very poignant way how lives may have been saved if the data had been available from the earlier trial.]

In response to Spitzer’s suit, GlaxoSmithKline agreed to share much more data with the public. The move prodded competitors and lawmakers to do better.

Then in 2007, a new US law broadened trial registration requirements and added mandates for disclosing summary data, adverse events, and participants’ demographic information on ClinicalTrials.gov.

“Mandatory posting of clinical trial information would help prevent companies from withholding clinically important information about their products,” Senator Charles Grassley, an Iowa Republican and a leading proponent of the law, said at the time. [Annotation: Grassley is another prominent politician, and this quote gives an illustrative snapshot in time in terms of what his hopes were when advocating for the regulations in 2007.] “To do less would deny the American people safer drugs when they reach into their medicine cabinets.”

High hopes not realized

The popularity of ClinicalTrials.gov shows progress on lawmakers’ goal of a better-informed public. The site logs 207 million monthly page views and 65,000 unique daily visitors. An NIH survey suggested that 8 in 10 are patients and their family members or friends, and medical professionals and researchers. [Annotation: This is very thorough reporting and great context: Just like with the $25 billion statistic, these figures give a sense of the scale and importance of the issue at hand. Instead of being vague, it drives home the actual, concrete use of ClinicalTrials.gov. The reader gets a sense that this website is big potatoes.] With a $5 million budget, the program’s staff of 38, operating at NIH in Bethesda, Md., reviews trial designs and results, provides services, conducts research, and sets policy.

Yet, the lofty hopes for the website have never been realized. More than 200,000 trials are registered on ClinicalTrials.gov, but many drug or device studies are exempted from reporting results, including most trials that have no US test site, those involving nutritional supplements, and early safety trials.

Study sponsors can also request permission to delay reporting on experimental drugs not yet approved by the FDA, and have done so on more than 4,300 occasions. [Annotation: Earlier in the piece, Piller noted that institutions have actively sought to delay reporting data. Here we see this point underscored.]

The many exceptions mean that virtually all research organizations — even the most conscientious — have posted results for just a small fraction of their registered trials.

AbbVie, one of the most legally compliant pharmaceutical companies, was involved in 459 registered trials. Just 25 of those required the reporting of results by law. Even for that small number, AbbVie achieved its relatively good reporting record not from exceptional efforts on public disclosure, but by diligently applying for filing extensions.

Biogen delivered results late or not at all 97 percent of the time, the worst record among pharma companies. It said that many of its studies were not covered by the reporting law, and that it had extension requests pending or planned for others — accounting for the poor performance. But STAT did not count trials exempted from the law, nor those that NIH listed as having applied for extensions.

The reporting of results on ClinicalTrials.gov, poor by any reasonable standard, is worse than it appears, some researchers say. [Annotation: The piece up until this point carefully stayed focused on the findings of the investigation, but here Piller widens the lens once again to show that there is much more that is unknown. This uncertainty creates a tension that drives the piece forward.]

In part, that’s because there is no easy way to tell whether companies or universities are registering all of their applicable trials, and there is no comprehensive policing mechanism. A research organization that appears conscientious in reporting results might be hiding some trials.

NYU’s Miller studied drugs from major companies approved by the FDA in 2012. In a recent article in the medical journal BMJ Open, she and her coauthors showed that trials subject to the legal requirements often were not registered, and results not provided — even when the firms gave the data confidentially to the FDA during the approval process.

Miller found that results from only about two-thirds of studies used to justify FDA approvals were posted to ClinicalTrials.gov or published elsewhere.

Reporting lapses betray research volunteers who assume personal risks based on the promise that they are contributing to public health, said Kathy Hudson, an NIH deputy director who helps to guide the agency’s clinical trial policies. [Annotation: Here, the piece shines a light on another human cost of late or nonexistent data reporting: the study participants. This helps the layperson understand further why this issue is of importance to people outside the research community.] “If no one ever knows about the knowledge gained from a study,” she said in an interview, “then we have not been true to our word.”

Draconian consequences — including FDA and NIH penalties — might prove necessary to improve the distorted evidence base of clinical medicine, said Dr. Ben Goldacre, a fellow at the University of Oxford and cofounder of The Compare Project and Alltrials.net, which advocates for disclosure of clinical research.

As a young doctor, Goldacre broke his leg in a fall while running to treat a cardiac arrest patient. “Nobody has ever broken a leg running down a corridor to try to fix the problem of publication bias,” he said. “That’s because of a failure of empathy.” [Annotation: This kicker works so well on a number of levels. First, it’s an evocative image. You can visualize the scene, with a doctor running down a hall. Second, it showcases the urgency that doctors feel in trying to help patients and compares it to the urgent need to fix clinical trial data gaps. This investigative story has given readers numerous reasons to care about the failure to report data; this kicker heightens the emotion even further.]

A Conversation with Charles Piller

Roxanne Khamsi: You’ve reported on so many topics, including biomedicine but also transportation, infrastructure, and prisons. Was there anything different about this particular type of investigation?

Charles Piller: For a story like this one, it didn’t involve situations where I could go and talk to patients or be in the lab and see what was going on firsthand. That’s a little bit unlike a lot of the investigations I’ve done in the past. For example, studying bridge engineering I could go to that bridge and go inside it, do my own photography and even in one case take samples of residue on the bridge and have them tested in a lab. Or, you know, doing an investigation of prison conditions, I could go talk to the prisoners or use other means of talking to them. This is one where you’re just a little bit one step removed, and so the challenge is to bring [the topic] to life.

RK: In recent years we’ve heard a ton about data transparency and how it’s lacking. What made you decide that you wanted to pursue that topic?

CP: What I realized is that, among the academic studies that have been written about this, there’s never any specifics given about how individual institutions are doing. Academics who write these articles are kind of providing a bit of—you might want to call it—professional courtesy not to call out their colleagues who are doing the worst jobs on this. And part of the reason, I think, is that many of these academics have a little bit of a conflict of interest. They want to get a job at those institutions, or they need the goodwill of their peers sometime down the line when applying for a grant.

RK: I want to ask you about the data visualization that’s such a key part of this story. What made this story ripe for an infographic? And why make that element interactive?

CP: That’s a good question. The interactive graphic was designed by my colleague Natalia Bronshtein, who’s our expert on such matters. She’s actually a PhD economist expert in statistics, and also a brilliant designer of informational graphics and interactive graphics. What we were going for was something a little bit different. A lot of times people portraying this sort of data might be tempted to create a chart that shows the rankings of institutions on various criteria. We wanted to stay away from that for a few reasons. One is that virtually all the institutions that we looked at did pretty badly, and creating a chart that ranked them from top to bottom might create an impression, in the reader, that some were doing great and others were doing not so well. What we wanted to do was create a single way of visualizing that gave people the relative performance but allowed the readers to see the details at a glance [using the interactive elements].

RK: It’s very cool. So turning to the text—I was unpacking the lede sentence, which has a lot of layers. You call out institutions, you describe the violation of a federal law, you speak to the effects on patients, and you note that the findings came from an original investigation that you conducted. So it’s really a hard-hitting lede sentence. What was the thought process for crafting this particular lede?

CP: We thought about different ways to do it. In general, particularly for investigative stories, we tend to shy away from anecdotal ledes or backing into what the most important news element of the story is.

RK: Can you explain why?

CP: Well, there’s no hard-and-fast rule about it. But it’s partly we want people to be grabbed right from the beginning in a way that gives them a clear guidepost for where the story’s going. This is a long story; it has different elements, and an interactive graphic. And we want to continually reward the reader from the very beginning with information that’s provocative, that doesn’t require them to wade through stuff to get to the payoff. We telegraph, “Wait, there’s something big and important here and here’s where it starts.” Now—as for the specifics—there is something pretty surprising to most people that some of the most prestigious research institutions in the country are among the worst violators of this sort of federal law.

RK: I was shocked when I read that. I think that’s one of the reasons this stuck with me. Did you have any inkling that the academic institutions would perform so poorly?

CP: Having reviewed the academic literature, I expected academic institutions not to provide the greatest result. What really surprised me was that the leading research institutions in the country were among the worst violators, and that institutions that were among the top recipients of NIH funding were among the worst performers in this very important measure. To their credit, some of them jumped right in to say we’re going to get on this.

RK: I noticed that Eliot Spitzer—who as New York attorney general filed the 2004 lawsuit alleging that the maker of Paxil concealed information that this drug caused some teens to have suicidal thoughts—was the first person you quoted in the piece.

CP: The fact is that he was at the genesis of this. Sometimes writers make the mistake of relying too heavily on people within [the biomedical] world, [such as] scientists and doctors. My feeling is that this is as much about cops as it is about science. And about how you enforce the law. And what better person, in a way, to do this than this guy, Spitzer, who not only himself has been a subject of public attention for awkward difficult things but also has been a cop in a sense?

RK: What was the toughest part of the investigation? Was downloading the information from ClinicalTrials.gov just as easy as pressing a button?

CP: There’s a link; you can download some of the information from ClinicalTrials.gov. But you can’t get everything, which is what we needed: everything. And you can’t get 100 percent of the information without doing additional coding in order to download it all, and I did not personally do that. That was done by my colleague Natalia.

RK: I didn’t know there was code needed to acquire the raw information.

CP: Yeah, there was a lot of programming. It took several weeks to develop the right program to get all the information downloaded from the NIH website.

RK: What were some of the other challenges?

CP: I think anyone who has worked with large datasets knows that all data is dirty, and a huge part of this project—and I’m talking about weeks of full-time effort—was cleaning the data. And it’s because the data are entered by human beings, and they don’t enter it consistently or correctly in every case. If you’re talking about consolidating all of the studies being done by Memorial Sloan Kettering Cancer Center, for example, and someone spells Memorial with two m’s in the middle instead of one, that isn’t easily going to be detected when you’re doing your data crunching. And so the first step that I had to do was to go through and correct all that. Also, a lot of pharmaceutical companies who are in the database have literally hundreds of subsidiaries, and what we needed to do was to consolidate all the studies. That was another gigantically time-consuming piece of the work. [But] I couldn’t interview any of the subjects of the investigation until I knew what the data said, and I couldn’t know what the data said until I completely cleaned it.
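The cleanup Piller describes, catching misspelled institution names and folding subsidiaries into their parent companies, can be illustrated with a small Python sketch. This is not the code STAT used. The canonical name list, the subsidiary mapping, the "Example Pharma" entries, the `normalize_sponsor` function, and the 0.9 similarity cutoff are all hypothetical choices made for illustration. The sketch relies only on the standard library's `difflib.get_close_matches`.

```python
from collections import Counter
from difflib import get_close_matches

# Hypothetical canonical sponsor names (illustrative, not STAT's actual list).
CANONICAL = [
    "Memorial Sloan Kettering Cancer Center",
    "Example Pharma",
]

# Hypothetical subsidiary-to-parent mapping; in practice this kind of table
# would have to be compiled and checked by hand.
PARENT_OF = {
    "Example Pharma Oncology": "Example Pharma",
}


def normalize_sponsor(name, cutoff=0.9):
    """Map a raw sponsor name to a canonical one when a close match exists."""
    cleaned = " ".join(name.split())          # collapse stray whitespace
    cleaned = PARENT_OF.get(cleaned, cleaned)  # fold subsidiary into parent
    match = get_close_matches(cleaned, CANONICAL, n=1, cutoff=cutoff)
    # Leave the name unchanged if nothing is similar enough.
    return match[0] if match else cleaned


raw_names = [
    "Memorial Sloan Kettering Cancer Center",
    "Memmorial Sloan Kettering Cancer Center",  # misspelling with an extra "m"
    "Example Pharma Oncology",
    "Unrelated University",
]

# Count studies per sponsor after normalization.
counts = Counter(normalize_sponsor(n) for n in raw_names)
```

In this toy input, both spellings of Memorial Sloan Kettering end up under a single name, which is the consolidation the analysis depends on. A high cutoff keeps distinct institutions from being merged by accident, but any automated match of this kind would still need the manual review Piller describes.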

RK: Have you always loved looking at this stuff? I’m kind of getting the sense that you love scrutinizing the details, because otherwise you couldn’t make this kind of thing happen.

CP: If you’re not a detail-oriented person who enjoys figuring out this kind of stuff, you shouldn’t be doing this kind of work. It can be frustrating and agonizing at times.

RK: But spending hours checking spelling errors requires so much patience. It’s amazing, really.

CP: Yeah, it’s that the potential payoff—that understanding the data and making sure that it’s correct—can be large. And I’m willing to invest what is, at times, painful hours of work to ensure that I have an opportunity to reveal something important. It’s like a mystery, and I love a mystery.

RK: Do you read mysteries?

CP: Yeah, I do from time to time. But I think what the mystery is here, for me, is will this seemingly endless list of banal information really show what’s important here, and how do you bring it to life for people? To do that you first have to clean it up and make sure it’s correct. I have to say that this is one of those cases where I actually developed a little bit of twinges of repetitive strain injury problems associated with working on these spreadsheets for weeks at a time. So you have to be a bit careful about that. Fortunately, I have good habits, and I’m very careful about it.

RK: What’s a good habit to have if someone’s trying to do the work that you’re doing?

CP: Taking a lot of breaks, that’s very important. When you feel a twinge, stop and rest.

RK: How long did the investigation take?

CP: It took many months. It was about three months of me working full time on it and also obviously the efforts of others, particularly including Natalia, and work by our editors, et cetera.

RK: Can you tell me a little about the kicker quote? What in your eyes made that particular quote so fitting for the end of the piece?

CP: What [it was] for me is that this was a problem that has gigantic real world consequences—as in people die, people get worse treatment, people get delayed treatment as a result of a failure of sharing information that can lead to stronger evidence-based medicine. This quote really drove it home that people need to be as committed to this process of data sharing as they are to helping an individual patient survive in a clinical treatment.

RK: I wonder if you have any tips for readers who are investigative journalists. Do you think it’s vital for them to have a way for people to send them encrypted or anonymous information?

CP: Absolutely. It’s crucial. It’s not required in most cases, but you have to work with [sources’] degree of concern and sometimes the degree of paranoia—and I use that [term] in the most complimentary way. So I have different layers of protections for people and ways for people to get in touch with me depending on what their concerns are. Most people just contact me in a normal way—they call me or they send me emails to my normal account. But I have other email accounts with higher degrees of security and encryption.

RK: I like that you phrase that in terms of how you have to empathize and meet the source at their level of comfort, because you can’t forget that you are half of the equation in terms of how the communications are happening.

CP: Yeah, you have to be careful not to scare people unnecessarily. But at the same time you want to be able to offer them an authoritative and well-thought-through set of options for them to communicate with you that is relatively easy on them and also makes them feel more protected. And so I have those options.

RK: It’s like a restaurant when you have something on the menu but you have something off the menu in case someone comes in and has a specific thing that they need.

CP: Yeah, and you offer it selectively to people. Not everybody needs it or wants it.

RK: The last question I have is about the new rules influenced by your investigation, which went into effect on January 18th. I guess you’re watching to see if they’re enforced?

CP: I think it’s too soon to tell. Basically everything’s in a state of limbo right now regarding FDA and NIH, and those are the two agencies that are at the heart of this issue. And as I wrote, they’d never basically done anything serious to enforce the law. Now they’re going to have an opportunity to do so, because the new rules are in effect, and they could enforce the law with fines and financial penalties if they chose to do so. Those actions will be determined largely by the new people in charge of those agencies, whoever they might be. So we’ll see about it. And we’ll be watching.

Charles Piller, STAT’s West Coast editor, writes watchdog reports and in-depth projects from his base in the San Francisco Bay Area. He previously worked as an investigative journalist for The Sacramento Bee and the Los Angeles Times, and has reported on public health, science, and technology from Africa, Asia, Europe, and Central America. Charles has won numerous journalism awards, has authored two investigative books about science, and also has reported extensively on national security, prison conditions, and bridge engineering. Follow him on Twitter @cpiller.

Natalia Bronshtein, interactives editor, creates data-driven stories for STAT, helping to turn complex information into visualizations that tease out hidden patterns and connections. After earning a doctorate in economics, Natalia conducted postdoctoral research at Brandeis University as a Fulbright Scholar, was a professor at two universities in Russia, and worked at Euromonitor International, a consulting company, where she covered health care and pharmaceuticals, among other industries. Her visualizations have been featured in The Best American Infographics 2016, The Atlantic, The Washington Post, Forbes, Vox, and elsewhere. Follow her on Twitter @ininteraction.

Roxanne Khamsi is chief news editor for Nature Medicine and a journalist whose work has appeared in Scientific American, Slate, Newsweek, and The New York Times Magazine. She teaches at Stony Brook University’s Alan Alda Center for Communicating Science. Follow her on Twitter @rkhamsi.